You know when someone walks into the middle of a conversation and says something that makes no sense?

That is exactly what happens when AI doesn’t get the full context.

Context engineering is a relatively new term that is gaining popularity in the AI world. AI tools such as ChatGPT work by responding to your input, but their memory works differently from human memory. If you do not give them the full information, the model starts to guess, and guessing leads to wrong answers.

So, what is context engineering? Context engineering is a way of organizing background information so that the AI understands what you are asking about. Instead of feeding the model random data, it tells the model what matters, what doesn’t, and what should come first.

Today, we will explain how context engineering in AI works, how it compares to prompt engineering, and why it’s key to getting better results. Let’s break it all down, shall we?

What Is Context Engineering?

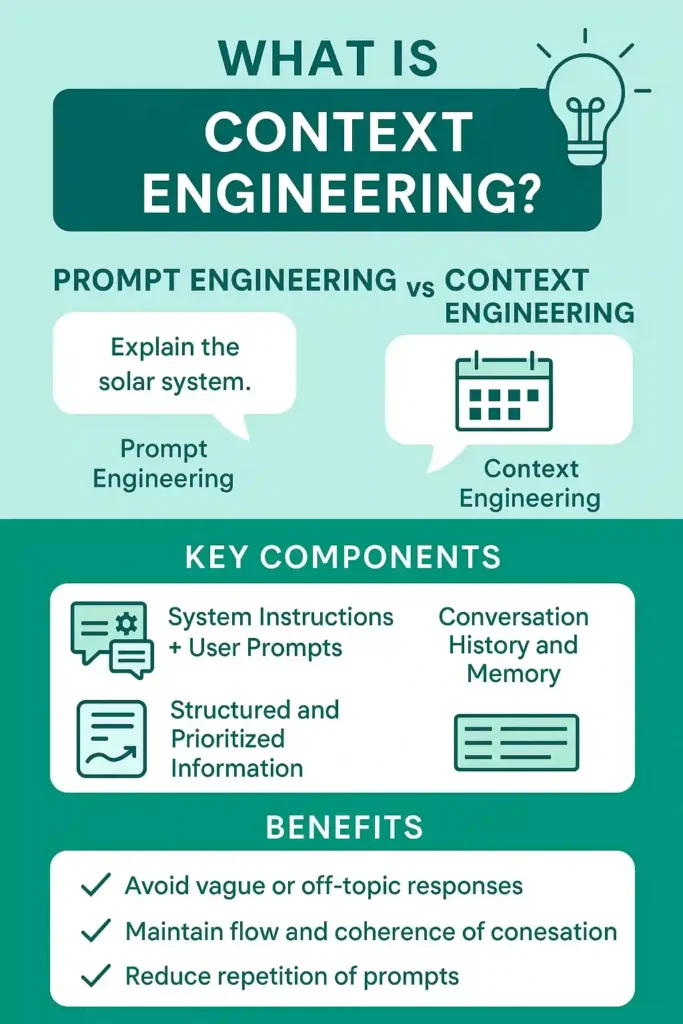

Context engineering is all about giving AI the right background information before asking it to respond. It differs from prompt engineering: in prompt engineering, you expect the AI model to figure things out from a single prompt, while in context engineering you give the AI the details it needs in advance. As a result, the AI doesn’t have to guess and can respond with clarity. When it is done right, it feels like talking to a friend who already knows everything.

Here’s what it usually involves:

- Adding the correct information so that the AI understands the task.

- Removing anything unnecessary that could confuse the model.

- Making the input clear and simple for the AI to understand.

- Keeping it brief and targeted to stay within memory/token limits.

- Frequently updating the context so that responses remain precise and relevant.

Why Context Matters More Than You Think

Talking to AI without any context feels like starting a conversation with someone who just joined mid-sentence. They will misunderstand you, however smart they are. Humans can rely on memory and shared history, but the same cannot be said for AI.

The key difference between humans and AI is:

- Humans can make educated guesses based on past experience.

- AI only knows what you give it. Beyond that, it guesses.

Without the right setup, AI models give answers that may be vague, wrong, or completely off topic. That is where context engineering in AI steps in. By providing the right background information, you can make the conversation more natural, avoid repeating yourself, help the model maintain the flow, and reduce randomness in its replies.

In short, context engineering in AI helps conversations feel less robotic and more like talking to someone who already knows the topic.

Key Components of Context Engineering

To help AI respond in a smart, focused way, we do not just give it a single prompt; we build a layered setup, which is called context. These layers give the model the background information it needs to understand your request correctly.

System Instructions + User Prompts

This is the starting point. System instructions set the behavior, such as “act like a helpful AI assistant,” while the user prompt tells the AI what to do. On their own, though, they don’t carry enough meaning without extra layers of information.
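Here is a minimal sketch of that first layer. It assumes the role-tagged message list that many chat-style APIs accept; the function name `build_messages` and the sample strings are hypothetical and not tied to any particular provider.

```python
def build_messages(system_instruction: str, user_prompt: str) -> list[dict[str, str]]:
    """Combine the two base layers into a role-tagged message list."""
    return [
        {"role": "system", "content": system_instruction},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages(
    "Act like a helpful AI assistant.",
    "Tell me about my calendar.",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Notice that nothing here tells the model what is actually on the calendar. That gap is what the remaining layers are meant to fill.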

Conversation History and Memory

To keep the chat on track, context also includes previous interactions. If you asked something five minutes ago and now you are following up, the AI needs to remember what was said before to stay relevant.
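One simple way to picture this is a rolling window of recent turns. The sketch below is an illustration only: the class name `ConversationMemory` and the `max_turns` setting are assumptions, and real systems may store history quite differently.

```python
from collections import deque


class ConversationMemory:
    """Keeps only the most recent turns so follow-ups stay in context."""

    def __init__(self, max_turns: int = 6) -> None:
        # A bounded deque drops the oldest turn automatically once it is full.
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))

    def render(self) -> str:
        """Format the remembered turns as text to place before the next prompt."""
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


memory = ConversationMemory(max_turns=3)
memory.add("user", "What is a context window?")
memory.add("assistant", "It is the amount of text a model can read at once.")
memory.add("user", "How big is it usually?")
memory.add("assistant", "Common sizes range from a few thousand tokens upward.")
print(memory.render())
```

With `max_turns=3`, the very first question has already been dropped by the time the fourth turn arrives, which is the trade-off a fixed window makes.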

Structured and Prioritized Information

Every AI model has limits (called token limits), which is why we can’t send everything. LLMs have fixed context windows (e.g. 8k, 32k, or 128k tokens), which limit the amount of data that can be processed at once. Context engineering helps select and compress the data, using techniques such as:

- Chunking: breaking long documents into small and readable bits.

- Summarizing: keeping the core ideas.

- RAG (Retrieval-Augmented Generation): finding and injecting only what’s needed from a database.
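Chunking, the first technique in the list above, could be sketched roughly as follows. This version counts words as a stand-in for tokens, which is an assumption; the function name `chunk_words` and the sizes are hypothetical.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks that overlap slightly."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # The last chunk already reaches the end of the text.
    return chunks


# A made-up 120-word document for demonstration.
document = " ".join(f"word{i}" for i in range(120))
chunks = chunk_words(document, chunk_size=50, overlap=10)
print(len(chunks), "chunks")
```

The small overlap is a design choice: it lowers the chance that a sentence split across two chunks loses its meaning in both.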

The Rise of Context Engineering in 2025

Until recently, most developers focused on writing better prompts or fine-tuning models. But by mid-2025 a change happened: people started to realize that context was the real game-changer.

One moment that sparked wider interest was a post shared by Shopify’s CEO, Tobi Lütke, on June 19, 2025. He wrote about how context engineering, not prompt engineering, made his company’s AI systems smarter. The post started a wave of interest across platforms such as LinkedIn and Medium.

There are a few reasons for this hype.

Rise of AI Agents and Token Limits

As AI agents get more advanced, people hit token limits faster. Even models like GPT-4o and Claude can’t keep everything in memory, so developers need ways to filter, compress, and prioritize data quickly.

Shift from Fine-Tuning to Context Manipulation

Retraining a model is costly, which is why many teams have shifted from retraining to shaping context instead. This means updating the input data rather than the model itself, which results in faster improvements and more control.

New Tools Like LangChain and LlamaIndex

These tools make it easier to manage context layers: they can pull data from databases, summarize long files, and inject the results into prompts at the right time. That makes context engineering more approachable, even for non-experts.

As the conversation around context engineering and prompt engineering got louder, developers saw that good context often solved more problems than the perfect prompt ever could. That is why context engineering moved from a niche idea to a standard practice in 2025.

Context Engineering vs Prompt Engineering

It is easy to confuse the two, but they are not the same thing. Prompt engineering and context engineering play different roles when working with AI.

- Prompt engineering focuses on the exact words you use to ask a question or give a command.

- Context engineering creates a whole scene around that prompt, so the AI does not have to guess anything because it has all the information that it needs.

Think of it like this:

- A prompt is: “Tell me about my calendar.”

- Context is: your actual calendar events, time zone, priorities, and preferences.

A prompt = instructions, whereas context = setup. Without the right context, even the best-written prompts fail. This is one of the key differences between context engineering and prompt engineering. One gives direction, and the other gives understanding.
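To make the calendar example concrete, here is a small sketch that places the setup ahead of the instruction. Everything in it is illustrative: `build_request`, the section labels, and the sample events are assumptions, not a prescribed format.

```python
def build_request(prompt: str, events: list[dict[str, str]],
                  time_zone: str, preferences: list[str]) -> str:
    """Place the context (the setup) ahead of the prompt (the instruction)."""
    event_lines = [f"- {e['start']} {e['title']}" for e in events]
    preference_lines = [f"- {p}" for p in preferences]
    sections = [
        f"Time zone: {time_zone}",
        "Events:\n" + "\n".join(event_lines),
        "Preferences:\n" + "\n".join(preference_lines),
        f"Request: {prompt}",
    ]
    return "\n\n".join(sections)


# All values below are made-up sample data.
request = build_request(
    prompt="Tell me about my calendar.",
    events=[
        {"start": "09:00", "title": "Team stand-up"},
        {"start": "14:30", "title": "Dentist"},
    ],
    time_zone="Europe/Berlin",
    preferences=["Mention conflicts first", "Keep it under five lines"],
)
print(request)
```

The prompt itself is still the same six words. Only the material around it has changed.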

Large AI teams use prompt engineering frameworks to test and improve instructions in order to get better results, while roles like prompt engineers and AI interaction designers focus on crafting prompts that match the user’s intent.

You need both in order to get the desired response, but context is what helps AI act less like a robot and more like a helpful assistant.

How Context Engineering Works: Practical Strategies

Context engineering is not just a one-time setup. It is a repeatable, flexible process that improves over time. You build a structure and see how well the AI responds, then refine what works and remove what doesn’t.

Build, Test, Refine

Even the best systems treat context engineering as an iterative process. You try different context inputs, check how well the AI responds, and then refine the context for accuracy and speed.

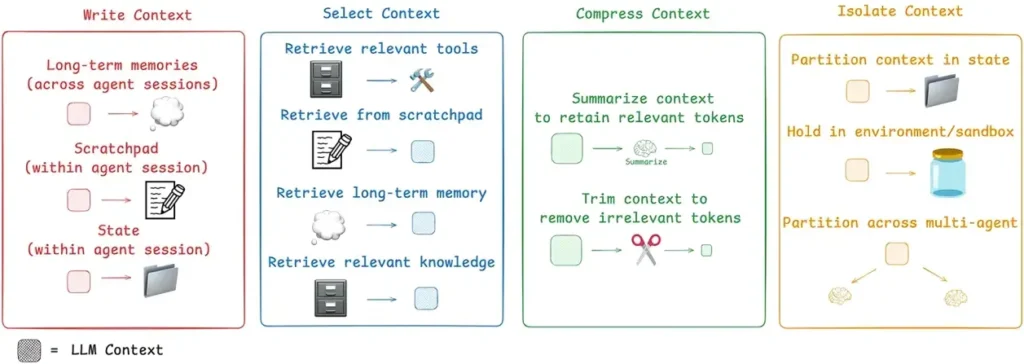

Context Writing

Context writing means storing useful information, such as facts, past chats, or user settings, outside the model’s immediate memory. You bring those details back only when needed, which reduces token use and keeps the response precise.
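As a rough illustration, the external store can be as simple as a keyed notebook. The `ContextStore` class and its keys are hypothetical; a production system would more likely use a database or file store.

```python
class ContextStore:
    """Holds notes outside the prompt and returns only the requested ones."""

    def __init__(self) -> None:
        self._notes: dict[str, str] = {}

    def write(self, key: str, note: str) -> None:
        self._notes[key] = note

    def read(self, keys: list[str]) -> str:
        # Unknown keys are skipped rather than raising an error.
        return "\n".join(self._notes[k] for k in keys if k in self._notes)


# Made-up sample notes.
store = ContextStore()
store.write("language", "User prefers answers in plain English.")
store.write("project", "User is building a recipe app.")
store.write("billing", "User is on the free plan.")

# Only the notes relevant to the current question are pulled back in.
context = store.read(["language", "project"])
print(context)
```

The billing note stays in the store and costs no tokens until a question actually needs it.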

Context Selection

AI doesn’t need to see everything; it needs only the right material. This step focuses on picking the most relevant chunks of information (from files, chat history, or databases) to use in the prompt.
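A toy version of selection might rank chunks by how many words they share with the question. This is a deliberately crude stand-in (an assumption on our part) for the meaning-based similarity that real retrieval systems tend to use; `tokenize` and `select_chunks` are hypothetical names.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its distinct words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_chunks(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k chunks that share the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(chunk)), chunk) for chunk in chunks]
    # Keep only chunks with at least one shared word, highest score first.
    relevant = [item for item in scored if item[0] > 0]
    relevant.sort(key=lambda item: item[0], reverse=True)
    return [chunk for _, chunk in relevant[:top_k]]


# Made-up sample chunks.
chunks = [
    "A refund is processed within five business days.",
    "Our office is closed on public holidays.",
    "To request a refund, open the order page and choose Refund.",
]
selected = select_chunks("How do I request a refund?", chunks, top_k=2)
print(selected)
```

The chunk about public holidays shares no words with the question, so it never reaches the prompt.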

Context Compression

Sometimes, even the relevant information is too long. Context compression creates summaries that keep the meaning but reduce the length. This helps stay within token limits while keeping the model informed.
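In practice, compression is often done by asking a model to summarize. The sketch below uses a much simpler extractive shortcut instead: it keeps whole sentences from the start of the text until a word budget runs out. That shortcut assumes the most important facts come first, and the function name `compress` is hypothetical.

```python
import re


def compress(text: str, max_words: int) -> str:
    """Keep whole sentences from the start until the word budget is used up."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    kept, used = [], 0
    for sentence in sentences:
        count = len(sentence.split())
        if used + count > max_words:
            break
        kept.append(sentence)
        used += count
    return " ".join(kept)


# Made-up sample notes.
notes = (
    "The customer ordered a laptop on Monday. "
    "It arrived with a cracked screen. "
    "They sent three photos and asked for a replacement. "
    "They also mentioned the weather was nice."
)
summary = compress(notes, max_words=15)
print(summary)
```

Cutting at sentence boundaries keeps each remaining statement complete, though a true summary would also preserve the replacement request that this shortcut drops.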

Context Isolation

When multiple AI agents work together (multi-agent systems), their information can get mixed up. Context isolation keeps each agent in its own ‘space’, so it focuses only on the information it needs, nothing more.
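One way to picture isolation is to give every agent its own private list of notes. The `Agent` class and the two example agents below are assumptions made for illustration, not the design of any specific multi-agent framework.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """An agent that sees only its own private context."""

    name: str
    # default_factory gives every agent a separate list instead of a shared one.
    context: list[str] = field(default_factory=list)

    def receive(self, note: str) -> None:
        self.context.append(note)

    def build_prompt(self, task: str) -> str:
        return "\n".join([f"Agent: {self.name}", *self.context, f"Task: {task}"])


researcher = Agent("researcher")
writer = Agent("writer")

researcher.receive("Source list: three articles on token limits.")
writer.receive("Style guide: short sentences, no jargon.")

print(researcher.build_prompt("Summarize the sources."))
print(writer.build_prompt("Draft the introduction."))
```

The researcher never sees the style guide and the writer never sees the source list, so neither prompt carries material the agent does not need.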

Photo taken for reference from Google.

Real-World Examples

Modern AI systems don’t rely on wording alone. Developers use advanced techniques to build systems that provide better results by focusing on structure, memory, and meaning. These tools and methods help manage context engineering and prompt engineering effectively.

- Prompt Templates: Formats such as “Role: [Role], Task: [Task], Context: [Data]” to guide the AI model more clearly.

- Vector Databases and Embeddings: Tools such as Pinecone or Weaviate find and bring in relevant documents based on meaning, not just keywords.

- RAG Pipelines: Frameworks such as LangChain and LlamaIndex let AI gather external information in real time as required.

- Memory Modules: AI uses short-term memory to store ongoing conversations and long-term memory to pull up earlier user preferences.
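The “Role / Task / Context” format from the first bullet can be sketched with Python’s built-in `string.Template`. The field names and sample values are made up for the example.

```python
from string import Template

# One line per field, mirroring the Role / Task / Context format.
PROMPT_TEMPLATE = Template("Role: $role\nTask: $task\nContext: $data")

prompt = PROMPT_TEMPLATE.substitute(
    role="Support agent",
    task="Answer the customer's refund question.",
    data="Refunds are processed within five business days.",
)
print(prompt)
```

Because `substitute` raises an error when a field is missing, a template like this also acts as a small check that no layer of context was forgotten.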

These real-world applications show how context engineering in AI results in smarter, more useful applications, particularly when speed and accuracy are most important.

Challenges and Pitfalls

Even though context engineering in AI makes systems smarter, it comes with its own set of issues. If these issues are not handled with care, the same context that helps the model can also hurt it.

Some of the main challenges are:

- Noisy or outdated context: Giving the model irrelevant or old information can confuse it. This is sometimes called context poisoning. The AI may focus on the wrong part or mix up important details.

- Distraction or confusion: If the input includes unrelated or conflicting data, the model might give vague or misleading answers.

- Token limits: A large language model can only process a fixed number of tokens at once. The important part is knowing what to include and what to leave out. Adding too much irrelevant data can push out the important parts.

- Balancing completeness and size: Giving the model enough data to be helpful without going over its memory limit is challenging. This is where strategies like content prioritization, summarization, and chunking are useful.
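The balancing act in the last bullet can be sketched as a small budgeting routine: rank the pieces of context, then add them until the limit is reached. The token estimate here is a loud assumption (roughly one token per word), and the names `estimate_tokens` and `pack_context` are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    # Rough assumption: about one token per word. Real tokenizers differ.
    return len(text.split())


def pack_context(items: list[tuple[int, str]], budget: int) -> list[str]:
    """Add items in priority order (lowest number first), skipping any that do not fit."""
    packed, used = [], 0
    for _, text in sorted(items, key=lambda item: item[0]):
        cost = estimate_tokens(text)
        if used + cost <= budget:
            packed.append(text)
            used += cost
    return packed


# Made-up sample items as (priority, text) pairs.
items = [
    (1, "System: answer briefly and cite the order number."),
    (3, "Old chat: the user asked about shipping times last month."),
    (2, "Order 1042 was delivered on Tuesday."),
]
packed = pack_context(items, budget=16)
print(packed)
```

With a budget of 16, the two highest-priority items fit and the old chat is left out, so the important parts are never the ones that get cut.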

Good context engineering requires constant testing to avoid these issues. If developers don’t structure the context carefully, it can do more harm than good.

Let’s Wrap it Up

To put it simply, context engineering is a simple but powerful way of giving AI the background it requires to respond accurately. Instead of only relying on well-written prompts, context engineering creates a full scene around the task so that there is no need for AI to guess.

The difference between context engineering and prompt engineering is clear: prompts give direction while context gives meaning. Without a solid context, even the best prompts fail. Context engineering in AI is not just a technique; it is the base of smarter, more useful conversations.