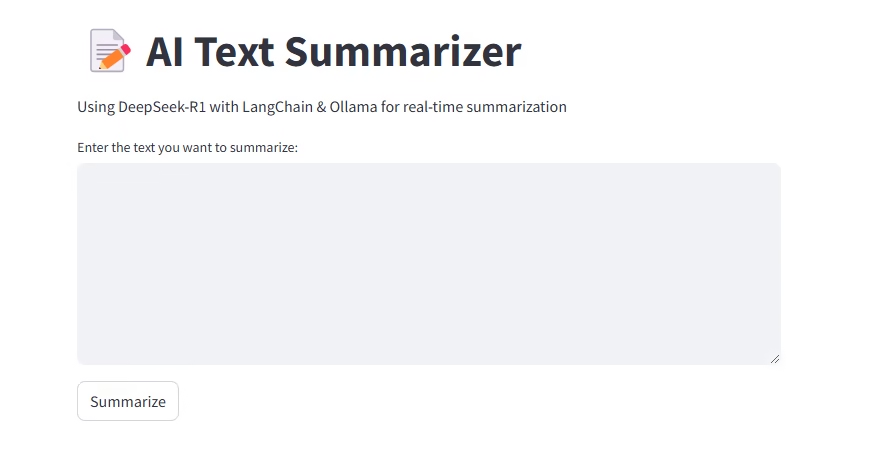

In this post, we'll build a real-time text summarizer using DeepSeek-R1, LangChain, and Ollama, all integrated into a Streamlit app. The goal is to stream the response so users can watch the summary appear as it is generated.

Why Use Ollama and DeepSeek-R1?

Ollama runs open-weight models such as DeepSeek-R1 on your own machine, so no cloud-based API is needed. DeepSeek-R1 is an open-weight reasoning LLM; the 1.5b tag used in this post is a small distilled variant that runs on modest hardware and works for tasks like text summarization.

Setting Up the Project

1. Install Dependencies

pip install streamlit langchain-ollama

2. Start Ollama and Download the Model

ollama serve

ollama pull deepseek-r1:1.5b
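
Before moving on, it can help to confirm that the server is up and the model was actually pulled. The sketch below is one way to do that from Python, assuming Ollama is listening on its default address and that its /api/tags endpoint returns a "models" list with a "name" field per entry. The helper names fetch_tags and model_is_available are made up for this example.

```python
import json
from urllib.request import urlopen

OLLAMA_URL = "http://localhost:11434"  # assumed default address, same as in the app


def model_is_available(tags_payload: dict, model: str) -> bool:
    """Return True if `model` is listed in an /api/tags response body."""
    names = [entry.get("name", "") for entry in tags_payload.get("models", [])]
    return model in names


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Ask the local Ollama server which models it has downloaded."""
    with urlopen(f"{base_url}/api/tags", timeout=5) as response:
        return json.load(response)
```

Calling model_is_available(fetch_tags(), "deepseek-r1:1.5b") should return True once the pull has finished. If fetch_tags raises a connection error, the server is most likely not running.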

3. Create the Streamlit App (app.py)

The following code initializes a LangChain Ollama model, sets up a streaming response, and displays the summary in real-time in the Streamlit UI.

import streamlit as st
from langchain_core.messages import HumanMessage  # langchain-core is installed with langchain-ollama
from langchain_ollama.chat_models import ChatOllama

st.set_page_config(page_title="Text Summarizer", layout="centered")
st.title("📝 AI Text Summarizer (Streaming)")
st.write("Using DeepSeek-R1 with LangChain & Ollama for real-time summarization")

user_input = st.text_area("Enter the text you want to summarize:", height=200)

# Connect to the local Ollama server (11434 is Ollama's default port).
llm = ChatOllama(
    model="deepseek-r1:1.5b",
    temperature=0.7,
    base_url="http://localhost:11434",
)

def generate_summary(input_text):
    """Yield the summary piece by piece as the model generates it."""
    messages = [HumanMessage(content=f"Summarize the following text:\n\n{input_text}\n")]
    # llm.stream() yields chunks as they arrive; no extra streaming flag is needed.
    for chunk in llm.stream(messages):
        yield chunk.content

if st.button("Summarize"):
    if user_input.strip():
        with st.spinner("Generating summary..."):
            # Placeholder that is re-rendered with the text received so far.
            summary_placeholder = st.empty()
            summary_text = ""
            for chunk in generate_summary(user_input):
                summary_text += chunk
                summary_placeholder.markdown(summary_text)
    else:
        st.warning("Please enter some text to summarize.")
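
One thing to watch for: DeepSeek-R1 is a reasoning model, and depending on your Ollama and langchain-ollama versions its output may begin with a <think>...</think> block that ends up in the displayed summary. The helper below is a minimal sketch for hiding that block while streaming. The name strip_reasoning is made up for this example, and it assumes the reasoning is wrapped in literal <think> tags.

```python
import re

# Matches a complete reasoning block, including newlines inside it.
_THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def strip_reasoning(text: str) -> str:
    """Remove <think>...</think> blocks, including one that is still open."""
    text = _THINK_BLOCK.sub("", text)
    # While streaming, the closing tag may not have arrived yet,
    # so hide everything after an unmatched opening tag.
    open_tag = text.find("<think>")
    if open_tag != -1:
        text = text[:open_tag]
    return text.strip()
```

To use it in the app, pass the accumulated text through the helper before rendering: summary_placeholder.markdown(strip_reasoning(summary_text)). The placeholder then stays empty while the model is still reasoning and fills in once the summary itself starts.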

How It Works

- User inputs text for summarization.

- The LangChain Ollama model (DeepSeek-R1) processes the input.

- The summary streams in real-time as it is being generated.
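
To see the streaming step in isolation, here is a small sketch that runs without Streamlit or Ollama. fake_stream is a hypothetical stand-in for llm.stream(), and accumulate records what the placeholder would display after each chunk arrives. Both names are invented for this illustration.

```python
from typing import Iterator, List


def fake_stream(text: str, size: int = 8) -> Iterator[str]:
    """Hypothetical stand-in for llm.stream(): yields the text in small pieces."""
    for start in range(0, len(text), size):
        yield text[start:start + size]


def accumulate(chunks: Iterator[str]) -> List[str]:
    """Return what the placeholder would show after each chunk arrives."""
    shown = ""
    snapshots = []
    for chunk in chunks:
        shown += chunk          # same pattern as summary_text += chunk
        snapshots.append(shown)  # the app re-renders the placeholder here
    return snapshots


for snapshot in accumulate(fake_stream("Streaming shows partial output early.")):
    print(snapshot)
```

Each printed line is a little longer than the one before it, which is the effect the user sees in the browser: the placeholder is overwritten with the full text so far, not appended to.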

Running the App

Start the Streamlit app with:

streamlit run app.py

Now, you’ll see the summary update dynamically instead of waiting for the full response! 🚀

Conclusion

With Ollama, DeepSeek-R1, and LangChain, we created a real-time AI summarization app that runs locally. This is a great way to build efficient, interactive AI applications without relying on external APIs.

Would you like to see more AI-powered Streamlit apps? Let me know in the comments! 😊
