Microsoft has recently launched a new language modelling approach for text-to-speech synthesis (TTS) called VALL-E (short for Voice DALL-E). TTS refers to generating spoken language from written or typed text. VALL-E is a neural codec language model, which means it has been trained to encode and decode spoken language using discrete codes derived from an off-the-shelf neural audio codec model.

VALL-E Model Diagram

Traditionally, TTS systems have approached the task of synthesizing speech as a continuous signal regression problem, in which the goal is to predict the appropriate waveform for a given piece of text. However, the VALL-E team has taken a different approach, treating TTS as a conditional language modelling task instead. In other words, VALL-E has been trained to generate spoken language based on a given set of text inputs, rather than trying to predict the exact waveform of the resulting speech.

One of the critical features of VALL-E is that it has been trained on a massive dataset of English speech, totalling 60,000 hours of audio. This is hundreds of times larger than the datasets used to prepare most existing TTS systems, and it has allowed VALL-E to emerge with in-context learning capabilities. This means that VALL-E is able to synthesize high-quality personalized speech based on just a 3-second recording of an unseen speaker’s voice as an acoustic prompt.

In order to evaluate the performance of VALL-E, the team conducted a series of experiments comparing it to the state-of-the-art zero-shot TTS system. Zero-shot TTS refers to the ability of a system to synthesize speech for a new speaker without any prior training data for that speaker. The results of these experiments showed that VALL-E significantly outperformed the existing system in terms of speech naturalness and speaker similarity. In other words, the speech generated by VALL-E was more lifelike and more accurately resembled the voice of the speaker in the acoustic prompt.

In addition to its superior performance in terms of speech quality, VALL-E was also able to preserve the speaker’s emotion and acoustic environment in the synthesized speech. This is a notable achievement, as most TTS systems struggle to accurately capture these elements of speech.

Read More: Uses cases of ChatGPT

It is worth mentioning that individuals who have lost their ability to speak may be able to “talk” again using this text-to-speech method if they have previous recordings of their own voices. In the past, a Stanford University Professor named Maneesh Agarwala stated that they were working on a similar project, in which they planned to record a patient’s voice before surgery and then use that pre-surgery recording to convert their electrolarynx voice back into their pre-surgery voice.

Features of VALL-E:

Synthesis of Diversity:

VALL-E is a language model for text-to-speech synthesis that is capable of generating diverse personalized speech samples for a given pair of text and speaker prompts. This is made possible by VALL-E’s use of sampling-based methods to generate discrete tokens. Essentially, these methods allow VALL-E to synthesize multiple different versions of the same input text by using different random seeds. This means that given a piece of text and a speaker prompt, VALL-E can produce a range of personalized speech samples that vary in terms of the specific words and phrases used, as well as other factors such as intonation and rhythm. The ability to synthesize diverse personalized speech samples is a unique feature of VALL-E and sets it apart from other text-to-speech systems that are only capable of generating a single version of the output speech for a given input.

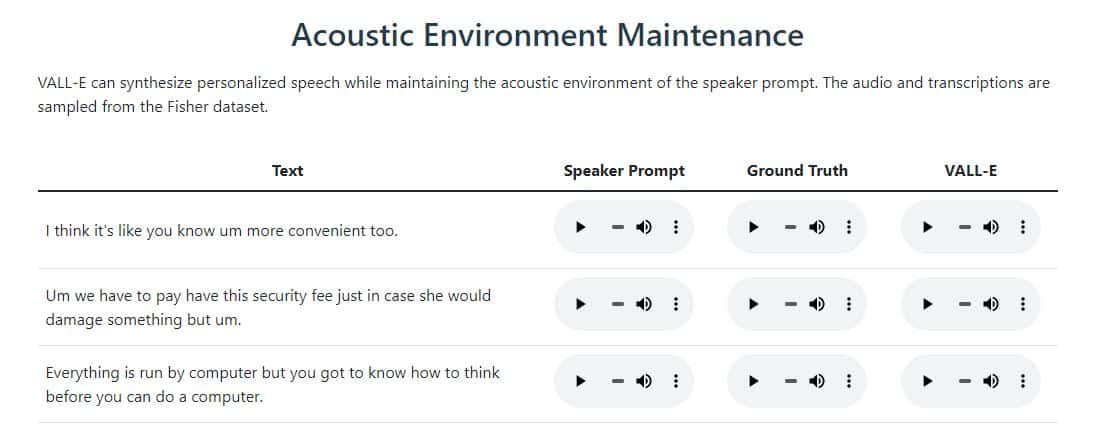

Acoustic Environment Maintenance:

VALL-E is a TTS that can generate personalized speech while preserving the acoustic characteristics of the speaker’s voice. This is achieved by training VALL-E on a large dataset of audio and transcriptions, allowing it to recognize and replicate the acoustic environment of different voices.

Speaker’s emotional maintenance

VALL-E is a TTS that can generate personalized speech while preserving the emotional tone of the speaker’s voice. This is made possible by VALL-E’s training on audio prompts sampled from the Emotional Voices Database, which includes a range of emotional speech samples labelled with corresponding emotion labels. By learning to recognize and replicate the emotional characteristics of different voices, it is able to generate personalized speech that accurately reflects the emotional tone of the speaker’s prompt. This capability sets VALL-E apart from other text-to-speech systems that may struggle to accurately capture the emotional content of speech.