OpenAI Token Counter For AI Models

Discover the Magic of our Token Counter Online Tool! It works just like the GPT-4 and GPT-3.5-turbo (ChatGPT) tokenizers. Copy and paste your text, and it’ll count the tokens, words and characters in it, so you’ll know whether you’re going over the limit. You can also use it to estimate token counts for other LLMs, though models that use a different tokenizer may report slightly different numbers.

What Are OpenAI GPT Tokens?

OpenAI GPT tokens are units of text that a language model uses to process and understand language. These tokens can represent individual words or smaller units like characters or subwords. Understanding tokens is crucial when working with OpenAI’s language models because they affect cost, response times, and the maximum input length for text.

Here are some key points about OpenAI GPT tokens:

- Tokenization: Tokenization is the process of breaking down a text into these individual units or tokens. For example, the sentence “ChatGPT is great!” might be tokenized into six tokens: [“Chat”, “G”, “PT”, “ is”, “ great”, “!”].

- Counting Tokens: It’s important to count tokens when using OpenAI’s API because you’re billed by the number of tokens processed, including both the input you send and the output the model generates. You can check the token count of a text with OpenAI’s tools or libraries, such as the open-source tiktoken library.

- Maximum Limit: Each OpenAI model has a maximum token limit (its context window), which covers your input and the model’s response combined. If your text exceeds this limit, you’ll need to shorten it or drop some parts to fit within the model’s constraints.

- Cost and Processing Time: The more tokens you use, the more it will cost and the longer it will take for the model to process the text.

- Token Efficiency: To optimize token usage, you can remove unnecessary spaces, use shorter synonyms, or truncate less important text parts.

- Special Tokens: In addition to regular tokens, some models use special tokens with specific roles, such as [CLS] and [SEP] in BERT-style models, or <|endoftext|>, which marks the end of a document in GPT models.
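To make the tokenization and maximum-limit points concrete, here is a minimal Python sketch. It is not how OpenAI’s tokenizers work internally: they use byte-pair encoding (BPE) over a vocabulary of roughly 100,000 pieces, while this sketch swaps in a simpler greedy longest-match rule over a tiny, hypothetical vocabulary chosen so that it reproduces the “ChatGPT is great!” example. The names `TOY_VOCAB`, `tokenize` and `truncate_to_limit` are illustrative only.

```python
# Hypothetical toy vocabulary for illustration. This is NOT OpenAI's real
# vocabulary, which is much larger and learned with byte-pair encoding (BPE).
TOY_VOCAB = {"Chat", "G", "PT", " is", " great", "!"}


def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text greedily into the longest matching vocabulary entries.

    Characters not covered by the vocabulary become single-character tokens.
    """
    max_len = max(len(piece) for piece in vocab)
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until one matches.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab or size == 1:
                tokens.append(piece)
                i += size
                break
    return tokens


def truncate_to_limit(tokens: list[str], max_tokens: int) -> str:
    """Keep only the first max_tokens tokens and join them back into text."""
    return "".join(tokens[:max_tokens])


tokens = tokenize("ChatGPT is great!", TOY_VOCAB)
print(tokens)                        # ['Chat', 'G', 'PT', ' is', ' great', '!']
print(len(tokens))                   # 6
print(truncate_to_limit(tokens, 4))  # ChatGPT is
```

Cutting at a token boundary, as `truncate_to_limit` does, is the simplest way to fit under a limit; in practice you would usually drop the less important parts of a text rather than just chopping off the end.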

How to Use the OpenAI Token Counter?

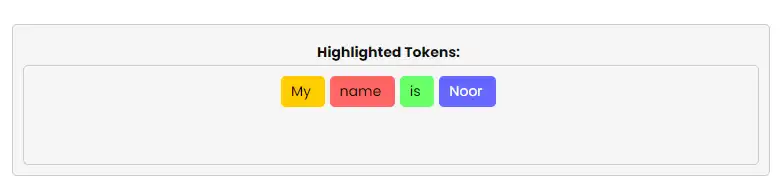

This tool makes it super easy to count the tokens for language models like ChatGPT or GPT-4. Just paste your text, and it automatically shows the token count, character count and word count. It also highlights each token in a different colour so you can see exactly how your text was split.

Step 1: Type or paste your text into the text area.

Step 2: Within a second, the token counter completes its calculations and shows the results, with each token highlighted.

How Can I Count Tokens?

To determine the precise token count of your text, run it through a tokenizer, which splits the text into tokens and counts them for you. Worried this sounds too technical? No need to worry! It’s as straightforward as copying and pasting your text into our GPT token counter above.
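As a rough illustration of what a counter like this reports, the sketch below computes character and word counts exactly and estimates tokens with the common rule of thumb that one token is about four characters of English text. That ratio is an approximation, not a tokenizer; for exact counts you would use the model’s own tokenizer, such as OpenAI’s open-source tiktoken library. The function name `count_text` is hypothetical.

```python
import math


def count_text(text: str) -> dict[str, int]:
    """Return character, word and estimated token counts for text.

    The token figure is only an estimate, assuming about four characters
    per token of English text. An exact count needs the model's tokenizer.
    """
    characters = len(text)              # counts Unicode code points
    words = len(text.split())           # words = runs of non-whitespace
    estimated_tokens = math.ceil(characters / 4)
    return {
        "characters": characters,
        "words": words,
        "estimated_tokens": estimated_tokens,
    }


print(count_text("ChatGPT is great!"))
# {'characters': 17, 'words': 3, 'estimated_tokens': 5}
```

For “ChatGPT is great!” the estimate (5) differs from the six-token split in the tokenization example earlier, which is why an estimate is no substitute for running the real tokenizer.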

Words vs. Tokens in Different Languages

In the world of language and text analysis, it’s important to understand how different languages handle words and tokens. Every language has a different word-to-token ratio. Here’s a straightforward breakdown:

English:

- In English, we often find that 1 word is approximately equal to 1.3 tokens.

- This means that if you have a sentence with 10 words in English, it might be counted as around 13 tokens when using token-based analysis.

Spanish and French:

- Spanish and French operate a bit differently.

- In these languages, 1 word is roughly equivalent to 2 tokens.

- So, if you have a sentence in Spanish or French with 10 words, it would typically be counted as about 20 tokens in token-based analysis.

Understanding the word-to-token ratio of each language helps you estimate how many tokens a text will use, and therefore how much it will cost and whether it fits within a model’s limit. Keep in mind that these ratios are rough averages; the exact count depends on the text itself and on the tokenizer.
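The arithmetic above (10 English words ≈ 13 tokens, 10 Spanish or French words ≈ 20 tokens) can be wrapped in a small helper. This Python sketch simply multiplies a word count by the per-language ratios quoted in this article; the table and function names are hypothetical, and real counts depend on the actual text and tokenizer.

```python
# Average tokens per word, as quoted in this article. These are rough
# rules of thumb, not properties of any particular tokenizer.
TOKENS_PER_WORD = {
    "english": 1.3,
    "spanish": 2.0,
    "french": 2.0,
}


def estimate_tokens(word_count: int, language: str) -> int:
    """Estimate a token count from a word count for the given language.

    Raises KeyError for languages not in TOKENS_PER_WORD.
    """
    ratio = TOKENS_PER_WORD[language.lower()]
    # Round to the nearest whole token.
    return round(word_count * ratio)


print(estimate_tokens(10, "English"))  # 13
print(estimate_tokens(10, "Spanish"))  # 20
```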

How Many Tokens Are Punctuation Marks, Special Characters, and Emojis?

In English and many other languages, punctuation marks, special characters, and emojis are usually treated as separate tokens by natural language processing models like GPT-3.5. Because these elements carry meaning and shape the structure of a sentence, the tokenizer generally keeps them apart from the surrounding words.

Here are some examples of how punctuation marks, special characters, and emojis are treated as tokens:

- Period (.) – One token.

- Comma (,) – One token.

- Exclamation mark (!) – One token.

- Question mark (?) – One token.

- Colon (:) – One token.

- Semi-colon (;) – One token.

- Hyphen (-) – One token.

- Apostrophe (') – One token.

- Ampersand (&) – One token.

- Dollar sign ($) – One token.

- Emojis (e.g., 😃) – Often one token, but because GPT tokenizers work on the underlying UTF-8 bytes, some emojis are split into two or more tokens.

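So, for example, a sentence like “Hello, world! 😃” might be divided into five tokens: “Hello”, “,”, “world”, “!” and “😃”. (GPT tokenizers usually attach the preceding space to the next word, as in “ world”, and may split an emoji into several tokens.)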
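The five-token split of “Hello, world! 😃” can be imitated with a one-line regular expression. This Python sketch uses a hypothetical rule, not GPT’s actual one: each run of letters or digits becomes a token, and every other non-space character (punctuation, symbols, emojis) becomes a token of its own. Real GPT tokenizers apply a more elaborate pattern followed by BPE, so their counts can differ. `TOKEN_PATTERN` and `simple_tokenize` are illustrative names.

```python
import re

# Hypothetical rule for illustration: a run of word characters is one token,
# and every other non-whitespace character (punctuation, symbols, emojis)
# is a token of its own. Whitespace is dropped.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def simple_tokenize(text: str) -> list[str]:
    """Split text into word tokens and single-character symbol tokens."""
    return TOKEN_PATTERN.findall(text)


tokens = simple_tokenize("Hello, world! 😃")
print(tokens)       # ['Hello', ',', 'world', '!', '😃']
print(len(tokens))  # 5
```

Because the pattern matches single Unicode code points for symbols, emojis built from several code points (for example, ones with skin-tone modifiers) would be split into pieces by this sketch.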

Keep in mind that tokenization rules vary depending on the specific tokenizer or natural language processing library being used, but in general these elements are treated as separate tokens because they contribute to the structure and meaning of the text. Use our token counter to get the exact number of tokens, just like the OpenAI token counter, plus an additional word count.